A unique challenge, and an exciting part, of being a Technical or Solution Architect is the decisions you make every day. While you might not get to design a rocket to Mars, even a small decision on your project can make a big difference to a wide variety of people. This includes the teams involved in product delivery (UX, development, testing), the operations team (handling day-to-day operations and reporting), the support team (L1, L2, L3), and the end users of the product. In this article, I will explain one such scenario (associating a Transaction ID with APIs) and the challenges in our decision-making process.

Project Background

My team of 100+ members was developing a net banking solution for one of the biggest private-sector banks in India. The solution was built on top of the experience platform my company has developed. We had around eight squads, each with 3 UI developers, 3 backend developers, 3 QA engineers, 1 Business Analyst, 1 Solution Architect, and 1 UX designer. We worked closely with the Bank’s IT and operations teams on requirement elicitation, design, and testing. Like many other projects in 2024, we followed agile practices, and the solution is based on a microservices architecture. While we were developing net banking, the Bank was building a mobile app with its in-house engineering team.

Requirement

One essential requirement from the operations team was that a string must uniquely identify every journey and every sub-step in a journey. That string is called a Transaction ID. The operations team uses it for various purposes, the key ones being:

- Reporting hourly to the business team on the success rate of various journeys and the top reasons for failure.

- Identifying and tracing the activities performed by a user during a session (done by the L1 and L2 support teams).

- Configuring user-friendly messages for the error codes returned for various journeys by backend systems and product processors.

- Blacking out, that is, temporarily stopping a user from carrying out specific journeys (due to regulation, supporting-system downtime, etc.). For example, in India, new deposits are not expected to be accepted on the financial year closing day, March 31st.
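To make the last use case concrete, here is a minimal sketch of a blackout check keyed by transaction ID. It is written in Python only for brevity. The ID `TXN_FD_OPEN`, the `BLACKOUTS` table, and the `is_blacked_out` function are hypothetical names for illustration; the article does not describe how the operations team actually configures blackouts.

```python
from datetime import date

# Hypothetical blackout table: transaction ID -> list of (start, end) dates, inclusive.
BLACKOUTS = {
    "TXN_FD_OPEN": [(date(2024, 3, 31), date(2024, 3, 31))],
}


def is_blacked_out(transaction_id, today):
    """Return True if the journey step with this transaction ID is blocked today."""
    windows = BLACKOUTS.get(transaction_id, [])
    return any(start <= today <= end for start, end in windows)
```

Because the check depends only on the transaction ID, the same table could serve any channel that sends or resolves that ID.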

When we started the program, this requirement was unknown to us, so we could not design for it before the development kick-off. After a few sprints, the operations team raised it in one of the sprint-end demo sessions, and the requirement came as a shock. However, since management's priority was to develop and showcase features through which business teams could see the value of the platform my company had sold, efforts to build common features like this one were not prioritized. Only when it could not be delayed any further did management take it up in project planning.

As a team, the architects discussed various ways to associate the different journey steps with a unique identifier. Below are the options we considered:

- Have a filter in the backend that sets the transaction ID in the MDC (Mapped Diagnostic Context) based on the URL pattern. The ID could then be used for logging, observability, reporting, and other purposes.

- Have the UI send the transaction ID in the header for each request.

Below are the factors the architecture team weighed before making the decision:

- Architecture and design fitment

- Performance and reliability

- Scalability for new features

- Ease of maintenance and change

A big challenge was that the platform did not offer such a capability and could not be enhanced within the project timeline. So, the implementation team needed to implement this as a one-off feature.

UI Setting the Transaction ID

Architecture and Design Fitment

In this option, every API call made by the UI carries the transaction ID as a header value. Conceptually this is very simple, as no complex architectural change is involved. However, each Angular view and component must know which transaction ID applies to each call it makes, which adds complexity to the UI logic.
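The idea can be sketched as a small helper that attaches the ID to the outgoing request headers. This is an illustration in Python rather than the project's Angular code, which the article does not show. The header name `X-Transaction-Id`, the ID `TXN_FD_OPEN_REVIEW`, and the function `with_transaction_id` are all assumptions.

```python
# Hypothetical header name, chosen for illustration only.
TRANSACTION_ID_HEADER = "X-Transaction-Id"


def with_transaction_id(headers, transaction_id):
    """Return a copy of the request headers with the transaction ID attached."""
    if not transaction_id:
        # Failing early keeps the problem at the one place where the ID is assigned.
        raise ValueError("every API call must carry a transaction ID")
    return {**headers, TRANSACTION_ID_HEADER: transaction_id}


# Each journey step names its own (hypothetical) ID at the call site.
headers = with_transaction_id({"Accept": "application/json"}, "TXN_FD_OPEN_REVIEW")
```

The call site is where the cost shows up: every view or component that issues a request has to supply the right ID for its journey step.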

Performance and reliability

Since there is no additional runtime computation, this option does not degrade performance. If a transaction ID is not received for any journey, we know that the issue is only in the place where it is assigned. This makes troubleshooting very easy.

Scalability for new features

As the number of journeys increases, the effort to incorporate transaction IDs increases proportionately. Because the IDs are assigned in code, their values must be agreed before development starts. When many stakeholders are involved in that decision (true in our case, as the operations team had to finalize each transaction ID based on the journey design in both the web and mobile channels), this delays development. If a transaction ID needs to change, the code needs to change.

However, this approach allows the backend to scale to new channels quickly. Because the transaction ID is left to each channel, the complexity of the backend services does not increase when they are used in a headless manner or when multiple applications or channels consume them.

Ease of Maintenance and Change

If the transaction ID needs to be changed, the front-end code needs to be changed and re-deployed. This makes this option maintenance-unfriendly.

Backend Determining the Transaction ID

In this option, a request filter matches the incoming service path against a cached map of API paths to transaction IDs. The transaction ID for the given API path is retrieved and set in the MDC context.
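A minimal sketch of such a filter, in Python for brevity, looks like this. MDC is a feature of Java logging frameworks such as SLF4J and Logback, so a `ContextVar` stands in for it here. The paths, IDs, and function names are hypothetical.

```python
from contextvars import ContextVar

# Stand-in for the MDC: a per-request context slot (hypothetical name).
transaction_id_ctx = ContextVar("transaction_id", default=None)

# Cached map of API path -> transaction ID (hypothetical entries).
PATH_TO_TRANSACTION_ID = {
    "/api/deposits": "TXN_FD_OPEN",
    "/api/beneficiaries": "TXN_BENEFICIARY_ADD",
}


def transaction_id_filter(path, handler):
    """Resolve the ID for the path, expose it while the handler runs, then reset."""
    token = transaction_id_ctx.set(PATH_TO_TRANSACTION_ID.get(path))
    try:
        return handler()
    finally:
        transaction_id_ctx.reset(token)


def handle_request():
    # Anything running inside the request (for example, logging) can read the ID.
    return f"handled with {transaction_id_ctx.get()}"
```

Calling `transaction_id_filter("/api/deposits", handle_request)` runs the handler with `TXN_FD_OPEN` in context. An unmapped path leaves the ID unset, which is the failure case discussed under performance and reliability.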

Architecture and Design Fitment

At first glance, this looks like a natural fit. However, it comes with the following challenges:

- If the same API is invoked from different journeys and the transaction ID needs to be different for each, the backend service requires an extra attribute, either as a header or as a query parameter. This means that the front-end code still needs to be changed. The key for the transaction ID then becomes API path + extra attribute (for example, the journey name). In our application, around 30% of the front-end code meets this criterion.

- Many APIs have dynamic values in path parameters. How do we get a pattern from an incoming API path? This is challenging, and we were not able to find a foolproof solution.

- Per microservice standards, the same API path can have different implementations for GET, PUT, POST, and DELETE, and each of them needs its own transaction ID. This means that, in addition to the API path and journey name, the HTTP method also becomes part of the key.

As you can see, generating and resolving the key becomes very complex as the application and its journeys grow more complex.
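To illustrate how the key grows, here is a sketch that resolves a transaction ID from the HTTP method, a path template with dynamic segments, and the journey name. It is written in Python for brevity and is not the project's implementation. The rule table, the `{placeholder}` template syntax, and every name in it are assumptions.

```python
import re

# Hypothetical rows: (HTTP method, path template, journey name, transaction ID).
RULES = [
    ("GET", "/api/accounts/{accountId}/statements", "STATEMENT", "TXN_STMT_VIEW"),
    ("POST", "/api/deposits", "FD_OPEN", "TXN_FD_OPEN"),
    ("POST", "/api/deposits", "RD_OPEN", "TXN_RD_OPEN"),
    ("DELETE", "/api/deposits/{depositId}", "FD_CLOSE", "TXN_FD_CLOSE"),
]


def template_to_regex(template):
    """Turn '/a/{id}/b' into a regex where each {placeholder} matches one path segment."""
    literal_parts = re.split(r"\{[^/{}]+\}", template)
    return re.compile("[^/]+".join(re.escape(part) for part in literal_parts))


COMPILED = [(m, template_to_regex(t), j, txn) for m, t, j, txn in RULES]


def resolve_transaction_id(method, path, journey):
    """Return the ID for (method, path, journey), or None if no rule matches."""
    for rule_method, pattern, rule_journey, txn in COMPILED:
        if rule_method == method and rule_journey == journey and pattern.fullmatch(path):
            return txn
    return None
```

Even this small version needs a three-part key. It also takes the first matching rule, so overlapping templates (for example, a literal segment and a placeholder at the same position) would depend on rule order.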

Performance and reliability

Whenever a request is received, a small computation is done to determine the transaction ID. This adds to the latency (even if it is less than ten milliseconds) and introduces an additional point of failure. When the transaction ID is not set, troubleshooting becomes complex, because the cause could lie in the mapping, the key, or the filter itself.

Scalability for new features

Whenever new features or journeys are added, the only change is to update the configuration that maintains the mapping between API path, method, journey name, and transaction ID. This makes the option easy to scale to new features.

Ease of Maintenance and Change

If any change is required for the transaction IDs, no code change is involved. Only configuration changes are required.

Final Decision

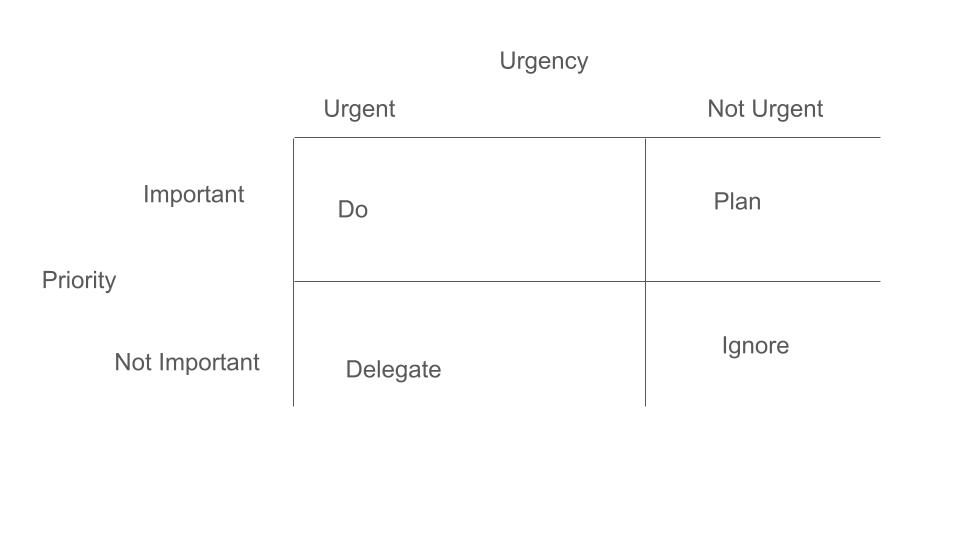

As you can see, the two options are drastically different, each with its own strengths and weaknesses. The impact of each strength and weakness is very high, which made the decision very difficult. We also needed to take into account the stage of the project and the changes required in the epics that had already been developed.

We assumed that transaction IDs would mostly stay the same, confirmed this assumption with the operations and business teams, and decided to use the front-end option. Changing the functionality already delivered had a short-term impact, and every journey developed since then has gone through a painful and time-sensitive discussion on transaction IDs. However, the simplicity of architecture and implementation outweighed the ease of maintenance and flexibility.